While everyone has been buzzing about AI agents and automation, AMD and Johns Hopkins University have been working on improving how humans and AI collaborate in research. Their new open-source framework, Agent Laboratory, is a complete reimagining of how scientific research can be accelerated through human-AI teamwork.

Among the many AI research frameworks out there, Agent Laboratory stands out for its practical approach. Instead of trying to replace human researchers (like many existing solutions), it focuses on supercharging their capabilities by handling the time-consuming aspects of research while keeping humans in the driver's seat.

The core innovation here is simple but powerful: rather than pursuing fully autonomous research (which often leads to questionable results), Agent Laboratory creates a virtual lab where multiple specialized AI agents work together, each handling a different aspect of the research process while staying anchored to human guidance.

Breaking Down the Virtual Lab

Think of Agent Laboratory as a well-orchestrated research team, but with AI agents playing specialized roles. Just like a real research lab, each agent has specific responsibilities and expertise:

- A PhD agent tackles literature reviews and research planning
- Postdoc agents help refine experimental approaches
- ML Engineer agents handle the technical implementation
- Professor agents evaluate and score research outputs

What makes this system particularly interesting is its workflow. Unlike traditional AI tools that operate in isolation, Agent Laboratory creates a collaborative environment where these agents interact and build upon each other's work.

The process follows a natural research progression:

- Literature Review: The PhD agent scours academic papers using the arXiv API, gathering and organizing relevant research
- Plan Formulation: PhD and postdoc agents team up to create detailed research plans
- Implementation: ML Engineer agents write and test code
- Analysis & Documentation: The team works together to interpret results and generate comprehensive reports
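To make the stage ordering concrete, here is a minimal Python sketch of the four phases as a sequential pipeline in which each stage reads what earlier stages produced. It is an illustration only: `Stage`, `run_pipeline`, and the placeholder stage functions are hypothetical names invented for this example, not Agent Laboratory's actual API, and every agent call is replaced by a stub that returns a string.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Shared notes that every stage can read from and add to.
Context = Dict[str, str]


@dataclass
class Stage:
    name: str                      # phase name, as listed above
    agents: List[str]              # agent roles assigned to the phase
    run: Callable[[Context], str]  # stub standing in for the agents' LLM calls


def literature_review(ctx: Context) -> str:
    return f"papers related to '{ctx['topic']}'"


def plan_formulation(ctx: Context) -> str:
    return f"plan based on {ctx['Literature Review']}"


def implementation(ctx: Context) -> str:
    return f"code implementing {ctx['Plan Formulation']}"


def analysis_and_documentation(ctx: Context) -> str:
    return f"report on {ctx['Implementation']}"


# Role assignments follow the list above; "whole team" is a placeholder label.
PIPELINE = [
    Stage("Literature Review", ["PhD"], literature_review),
    Stage("Plan Formulation", ["PhD", "Postdoc"], plan_formulation),
    Stage("Implementation", ["ML Engineer"], implementation),
    Stage("Analysis & Documentation", ["whole team"], analysis_and_documentation),
]


def run_pipeline(topic: str, stages: List[Stage]) -> Context:
    """Run the stages in order; each output is stored under its stage name."""
    ctx: Context = {"topic": topic}
    for stage in stages:
        ctx[stage.name] = stage.run(ctx)
    return ctx


if __name__ == "__main__":
    result = run_pipeline("sparse attention", PIPELINE)
    for stage in PIPELINE:
        print(f"{stage.name} ({', '.join(stage.agents)}): {result[stage.name]}")
```

The shared context is the design point: later stages never start from scratch, which mirrors the idea of agents building on each other's work.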

But here's where it gets really practical: the framework is compute-flexible, meaning researchers can allocate resources based on their access to computing power and their budget constraints. This makes it a tool designed for real-world research environments.

Schmidgall et al.

The Human Factor: Where AI Meets Expertise

While Agent Laboratory packs impressive automation capabilities, the real magic happens in what they call “co-pilot mode.” In this setup, researchers can provide feedback at each stage of the process, creating a genuine collaboration between human expertise and AI assistance.
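One way to picture co-pilot mode is as a checkpoint after each stage, where a human can either accept the draft or send back notes for a revision. The Python sketch below is a hypothetical illustration of that pattern, not the framework's own code: `with_checkpoint`, `produce`, and `review` are names invented here, and the cap on revision rounds is an assumption.

```python
from typing import Callable, Optional

# produce(notes) returns a draft; notes is None on the first attempt.
Producer = Callable[[Optional[str]], str]
# review(draft) returns None to accept, or feedback text to request a revision.
Reviewer = Callable[[str], Optional[str]]


def with_checkpoint(produce: Producer, review: Reviewer, max_rounds: int = 3) -> str:
    """Re-run a stage until the reviewer accepts or max_rounds drafts are used."""
    notes: Optional[str] = None
    draft = ""
    for _ in range(max_rounds):
        draft = produce(notes)
        notes = review(draft)
        if notes is None:  # reviewer accepted the draft
            break
    return draft


def autonomous_review(draft: str) -> Optional[str]:
    """Autonomous mode: every draft is accepted without human input."""
    return None


if __name__ == "__main__":
    def produce(notes: Optional[str]) -> str:
        return "plan v1" if notes is None else f"plan revised per: {notes}"

    def human_review(draft: str) -> Optional[str]:
        # Stand-in for a researcher typing feedback at the checkpoint.
        return "add an ablation study" if draft == "plan v1" else None

    print(with_checkpoint(produce, autonomous_review))
    print(with_checkpoint(produce, human_review))
```

In this framing, autonomous mode is simply the special case where the reviewer always accepts, so the same stage code serves both modes.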

The co-pilot feedback data reveals some compelling insights. In autonomous mode, Agent Laboratory-generated papers scored an average of 3.8/10 in human evaluations. But when researchers engaged in co-pilot mode, those scores jumped to 4.38/10. What is particularly interesting is where these improvements showed up: papers scored significantly higher in clarity (+0.23) and presentation (+0.33).

But here is the reality check: even with human involvement, these papers still scored about 1.45 points below the average accepted NeurIPS paper (which sits at 5.85). This isn't a failure, but it is a crucial lesson about how AI and human expertise need to complement each other.

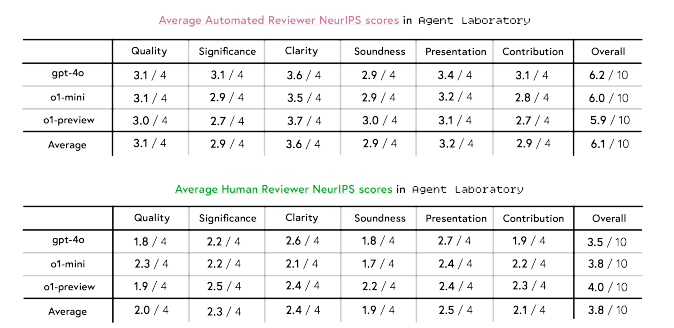

The research revealed something else fascinating: AI reviewers consistently rated papers about 2.3 points higher than human reviewers. This gap highlights why human oversight remains crucial in research evaluation.


Breaking Down the Numbers

What really matters in a research environment? Cost and performance. Agent Laboratory's approach to model comparison reveals some surprising efficiency gains in this regard.

GPT-4o emerged as the speed champion, completing the entire workflow in just 1,165.4 seconds, which is 3.2x faster than o1-mini and 5.3x faster than o1-preview. But what's even more important is that it costs only $2.33 per paper. Compared to previous autonomous research methods that cost around $15, we're looking at an 84% cost reduction.
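The arithmetic behind these figures is easy to check. The short Python sketch below recomputes the cost reduction from the two dollar amounts quoted above and derives the runtimes implied by the stated speedups. The o1-mini and o1-preview runtimes are back-calculated here from the ratios, not numbers reported in this article, and the function names are made up for the example.

```python
GPT4O_SECONDS = 1165.4   # full-workflow runtime quoted above
GPT4O_COST_USD = 2.33    # cost per paper quoted above
PRIOR_COST_USD = 15.0    # "around $15" for earlier autonomous methods

# Speedups of GPT-4o relative to each model, as quoted above.
SPEEDUP_VS = {"o1-mini": 3.2, "o1-preview": 5.3}


def cost_reduction(new_cost: float, old_cost: float) -> float:
    """Fractional saving of new_cost relative to old_cost."""
    return 1.0 - new_cost / old_cost


def implied_runtime(base_seconds: float, speedup: float) -> float:
    """Runtime of the slower model implied by a speedup factor."""
    return base_seconds * speedup


if __name__ == "__main__":
    saving = cost_reduction(GPT4O_COST_USD, PRIOR_COST_USD)
    print(f"Cost reduction: {saving:.0%}")
    print(f"GPT-4o: about {GPT4O_SECONDS / 60:.0f} min")
    for model, factor in SPEEDUP_VS.items():
        minutes = implied_runtime(GPT4O_SECONDS, factor) / 60
        print(f"{model}: about {minutes:.0f} min (implied)")
```

The saving works out to 1 - 2.33/15, or roughly 84%, matching the figure above. The implied runtimes come to about an hour for o1-mini and a little over 100 minutes for o1-preview, against roughly 19 minutes for GPT-4o.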

Looking at model performance:

- o1-preview scored highest in usefulness and clarity
- o1-mini achieved the best experimental quality scores
- GPT-4o lagged in metrics but led in cost-efficiency

The real-world implications here are significant.

Researchers can now choose their approach based on their specific needs:

- Need rapid prototyping? GPT-4o offers speed and cost efficiency
- Prioritizing experimental quality? o1-mini might be your best bet
- Looking for the most polished output? o1-preview shows promise

This flexibility means research teams can adapt the framework to their resources and requirements, rather than being locked into a one-size-fits-all solution.

A New Chapter in Research

After looking into Agent Laboratory's capabilities and results, I'm convinced that we're looking at a significant shift in how research will be conducted. But it's not the narrative of replacement that often dominates headlines; it's something far more nuanced and powerful.

While Agent Laboratory's papers are not yet hitting top conference standards on their own, they're creating a new paradigm for research acceleration. Think of it like having a team of AI research assistants who never sleep, each specializing in a different aspect of the scientific process.

The implications for researchers are profound:

- Time spent on literature reviews and basic coding could be redirected to creative ideation
- Research ideas that might have been shelved due to resource constraints become viable
- The ability to rapidly prototype and test hypotheses could lead to faster breakthroughs

Current limitations, like the gap between AI and human review scores, are opportunities. Each iteration of these systems brings us closer to more sophisticated research collaboration between humans and AI.

Looking ahead, I see three key developments that could reshape scientific discovery:

- More sophisticated human-AI collaboration patterns will emerge as researchers learn to leverage these tools effectively
- The cost and time savings could democratize research, allowing smaller labs and institutions to pursue more ambitious projects
- The rapid prototyping capabilities could lead to more experimental approaches in research

The key to maximizing this potential? Understanding that Agent Laboratory and similar frameworks are tools for amplification, not automation. The future of research isn't about choosing between human expertise and AI capabilities; it's about finding innovative ways to combine them.